Artificial Intelligence (AI) entered the public zeitgeist with the launch of ChatGPT toward the end of 2022, even though machine learning and AI research had been ongoing for decades. AI is an extraordinarily broad topic that means many things to many people, with conversations ranging from having it take over basic machine learning tasks to support (or replace) workers, to advanced systems that can manage our increasingly interconnected digital society at inhuman speeds. This blog will not add to the growing list of predictions forecasting massive job loss, nor will it discuss the potential of a new utopia (or dystopia) just over the horizon.

This blog follows our recently posted piece from FortiGuard Labs, titled “A Tentative Step Towards Artificial General Intelligence with an Offensive Security Mindset,” which looked at the potential impact of AI on cybersecurity. Here, I examine how security practitioners can use AI as a practical tool for specific tasks, freeing up time for IT teams to investigate more pressing or interesting topics.

For this blog, I focus on the large language model (LLM)-based AI tools that have recently become generally available (e.g., ChatGPT, Google Bard, Microsoft Bing AI). Of course, AI is more than chatbots, but chatbots make it very easy to demonstrate my point.

A Day in the Life

As a Senior Threat Intelligence Engineer at Fortinet, my job requires me to search for, reverse engineer, and blog about malware. Each function requires multiple steps (some unique to the topic at hand), and many of these steps can be time-consuming. Enter automation.

Automation is one of the security industry’s holy grails. It allows data to be collected and correlated, threats or vulnerabilities to be identified, and a fully coordinated response to happen before a malicious actor can complete their objective. In many cases, tools already exist to enable this activity, such as Security Orchestration, Automation, and Response (SOAR) solutions. However, there are places and scenarios where this sort of technology is not available or practical, especially on a smaller scale. AI can help in some of these circumstances.

I’ll share three examples of where I’m actively using AI (specifically, ChatGPT) to save time in my everyday work stack.

YARA

One of the most common tasks in my role is to create YARA rules to help me search for interesting malware samples that I can reverse engineer and then blog about. YARA is an open-source, multi-platform tool that uses text-string and binary pattern matching to aid in the identification and classification of malware. As you can imagine, writing such rules requires careful thought about what to search for. In many cases, this means using current terms, especially news topics that could readily be adapted into file names and email lures designed to entice people to click on them.
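To make this concrete, here is a minimal Python sketch of the kind of keyword-based rule described above. It assumes the keywords have already been chosen; the function name `build_yara_rule`, the rule name, and the sample keywords are hypothetical and used only for illustration. The sketch only emits rule text. Compiling and scanning would be done with YARA itself (for example, the `yara` command-line tool or the yara-python bindings). Note that plain string rules like this match file contents, not file names.

```python
import re

# YARA identifiers must start with a letter or underscore and contain only
# letters, digits, and underscores (reserved keywords are not checked here).
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def build_yara_rule(rule_name: str, keywords: list[str]) -> str:
    """Return YARA rule source that matches a file containing any of the keywords."""
    if not _IDENTIFIER.fullmatch(rule_name):
        raise ValueError(f"invalid YARA rule identifier: {rule_name!r}")
    if not keywords:
        raise ValueError("at least one keyword is required")

    lines = [f"rule {rule_name}", "{", "    strings:"]
    for i, keyword in enumerate(keywords):
        # Escape backslashes and double quotes so each keyword is a valid YARA text string.
        escaped = keyword.replace("\\", "\\\\").replace('"', '\\"')
        # ascii + wide: match both 8-bit and UTF-16LE encodings; nocase: ignore case.
        lines.append(f'        $kw{i} = "{escaped}" ascii wide nocase')
    lines += ["    condition:", "        any of them", "}"]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical lure keywords, for illustration only.
    print(build_yara_rule("News_Lure_Keywords", ["AI chatbot", "quarterly layoffs"]))
```

The `any of them` condition makes the rule fire on a single keyword hit, which suits broad hunting for samples; a tighter rule might require several keywords (for example, `2 of them`) to cut down on noise.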

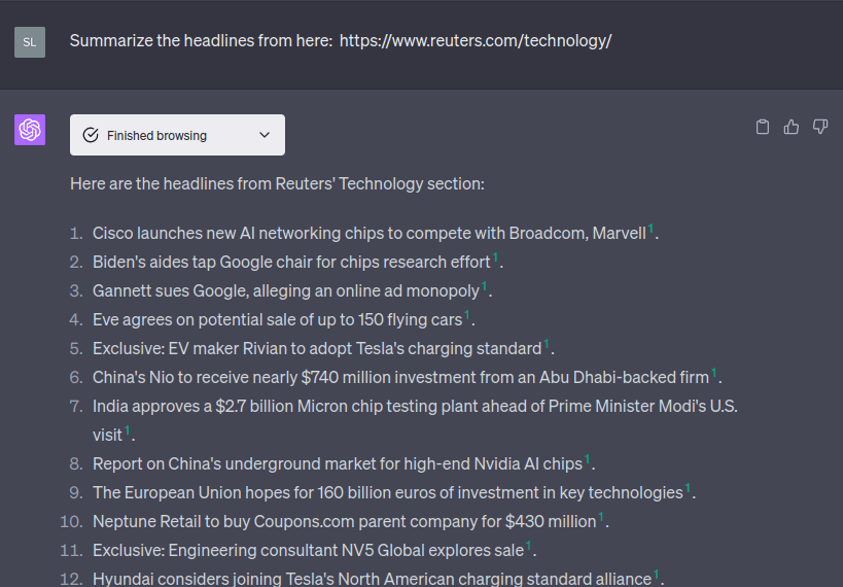

To illustrate this, let’s use the Reuters technology news homepage as an example. A manual search usually involves scanning the site for stories, distilling keywords or phrases from them, and then adding them to a YARA rule. With internet access and browsing capability, AI can shortcut this process. Rather than searching manually, I can direct the AI to summarize an entire news page.

The distilled information it provides makes the usefulness of the chat model readily apparent. Once the summary is complete, I can direct the AI to turn the story summaries into keywords and phrases.
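In this workflow, the chat model does the keyword extraction. For readers who want a deterministic baseline to compare against, the sketch below swaps in a much cruder technique: simple word-frequency counting over the summaries with a small, hand-picked stop-word list. It is not how ChatGPT derives keywords, and the function name `extract_keywords`, the stop words, and the sample summaries are all hypothetical.

```python
import re
from collections import Counter

# Illustrative stop-word list (an assumption; production lists are far longer).
STOP_WORDS = {
    "a", "an", "and", "are", "as", "at", "by", "for", "from", "has", "in",
    "is", "it", "its", "new", "of", "on", "or", "that", "the", "to", "with",
}


def extract_keywords(summaries: list[str], top_n: int = 10, min_len: int = 2) -> list[str]:
    """Return up to top_n of the most frequent non-stop-words across all summaries."""
    counts = Counter()
    for summary in summaries:
        # Lowercase, then split on anything that is not a letter or digit.
        for token in re.findall(r"[a-z0-9]+", summary.lower()):
            if len(token) >= min_len and token not in STOP_WORDS:
                counts[token] += 1
    # most_common orders by count; ties keep first-seen order.
    return [word for word, _ in counts.most_common(top_n)]


if __name__ == "__main__":
    # Hypothetical story summaries, standing in for the chatbot's output.
    summaries = [
        "Chipmaker shares rise as AI demand grows",
        "Regulators probe AI chatbot data practices",
        "Chipmaker announces new AI accelerator",
    ]
    print(extract_keywords(summaries, top_n=2))  # ['ai', 'chipmaker']
```

Frequency counting misses multi-word phrases and any sense of which topics make convincing lures, which is exactly where the chat model earns its keep.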